Are we reinventing the cloud?

TL;DR: this post is a rant about the unnecessary complexity and duplication that, so far, I haven't found beneficial when running workloads on managed Kubernetes.

#Incipit

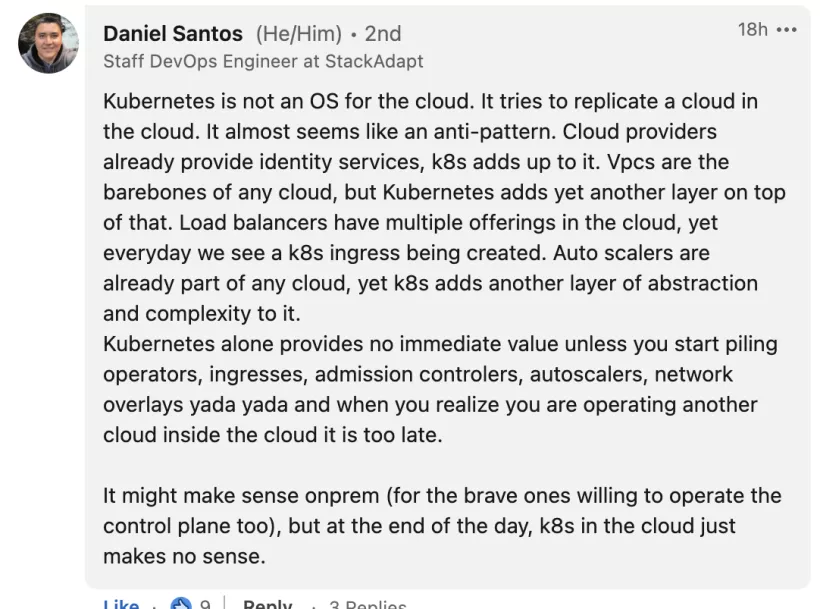

Everything started from this comment on LinkedIn, a comment that I support 100%.

original comment here

#Delusional behaviour: Kubernetes everywhere

The more I use Kubernetes, the more I'm puzzled about the direction we are going in.

Kubernetes is an excellent abstraction layer that can be installed over a pre-existing infrastructure. It provides a robust framework on top of which you can deploy components that make the underlying infrastructure "software-defined".

#But, there is a but

When you run on-premises infrastructure, you most likely have a small part of it software-defined (VMware, OpenStack), but not the entire set of services your workloads need. Hence the need for Kubernetes.

Software-defined is the core principle of every major cloud provider. And a robust framework is exactly what AWS, Azure and GCP offer via their own APIs, SDKs and CLIs.

#The Duplication

Then let's consider the components you need to (yes, you must) install on top of Kubernetes to offer additional services: Access Control, DNS management, Load Balancing and traffic routing, Deployment Automation and Secrets management, to name a few examples. The list keeps getting longer.

Actually, let's make this list more pragmatic. As an engineer, in order to install and manage a workload on Kubernetes on AWS, I have to provision both cloud resources and K8S components. For fun, I will group them together to showcase what I believe are unnecessary duplications.

#IAM

| on AWS | self-managed on K8S |
|---|---|
| IAM Roles | Roles |
| Policies | Role Bindings |
| Trust-Relationships | Service Accounts |

#Networking

| on AWS | self-managed on K8S |
|---|---|
| Security Groups | Network Policies |
| Load Balancer, Target Groups | Ingress Component, Routes |
| DNS Hosted Zone (Route53) | External-DNS Component |
| ACM Certificates | Cert-Manager Component |

Sidenote:

- with Security Groups you represent cloud components as actors, so you don't have to deal with IPs (which makes sense in this era).

- with Network Policies the same works only as long as you stay within the realm of K8S; if you need to secure the path towards a cloud-managed database, you have to deal with IPs again.
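To make the sidenote concrete, here is a minimal sketch that models both rules as plain Python dictionaries. The field names follow the shape of an EC2 security group ingress permission and of a `networking.k8s.io/v1` NetworkPolicy, but every identifier (the group ID, the labels, the CIDR, the port) is a made-up placeholder, and the helper `references_ips` is hypothetical, written only for this illustration.

```python
# Sketch only: nothing here talks to AWS or to a cluster.
# All IDs, labels, ports and CIDRs below are hypothetical placeholders.

# AWS: ingress rule on the database security group. The source is the
# application's security group, so no IP address appears anywhere.
db_ingress_rule = {
    "IpProtocol": "tcp",
    "FromPort": 5432,
    "ToPort": 5432,
    "UserIdGroupPairs": [{"GroupId": "sg-app-placeholder"}],
}

# Kubernetes: egress NetworkPolicy for the same path. The managed database
# lives outside the cluster, so there is no pod or namespace to select and
# the peer has to be expressed as an ipBlock.
app_egress_policy = {
    "apiVersion": "networking.k8s.io/v1",
    "kind": "NetworkPolicy",
    "metadata": {"name": "app-to-db"},
    "spec": {
        "podSelector": {"matchLabels": {"app": "my-app"}},
        "policyTypes": ["Egress"],
        "egress": [
            {
                "to": [{"ipBlock": {"cidr": "10.0.42.0/24"}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }
        ],
    },
}

# Keys that indicate a rule is expressed in terms of IP ranges.
IP_KEYS = {"ipBlock", "cidr", "IpRanges", "CidrIp"}


def references_ips(node):
    """Return True if any nested key describes an IP-based peer."""
    if isinstance(node, dict):
        return any(k in IP_KEYS or references_ips(v) for k, v in node.items())
    if isinstance(node, list):
        return any(references_ips(item) for item in node)
    return False


if __name__ == "__main__":
    print("security group rule uses IPs:", references_ips(db_ingress_rule))
    print("network policy uses IPs:", references_ips(app_egress_policy))
```

The point is not the helper itself but the shape of the two documents: the first names an actor, the second names a network range that someone has to keep in sync with the database's real addresses.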

#Scaling & Elasticity

| on AWS | self-managed on K8S |
|---|---|
| Autoscaling group, Launch Templates | Cluster Autoscaler / Karpenter component |

#Secrets Management

| on AWS | self-managed on K8S |
|---|---|
| Secrets Manager entities | External Secret Operator component, etcd secrets |

#Observability

| on AWS | self-managed on K8S |
|---|---|
| CloudWatch agent | Kube Prometheus Stack, Grafana, Metrics Server components, Logging Agent (fluentd, promtail, ...) |

#Additionally on K8S, to make life easier

| self-managed on K8S |
|---|
| Reloader for config map/secret rotation |
| Trivy for keeping an eye on potential vulnerabilities popping up in the running containers |

#Day 2

Now we have to keep the infrastructure up to date with the new functionality coming from the cloud provider (AWS SDK, deprecations, innovations), and on top of that we need to:

- Periodically update Kubernetes Control Plane

- Carefully rotate the Kubernetes nodes when the time comes (hopefully the workload has a Pod Disruption Budget)

- Check each image of the self-managed components for vulnerabilities

- Periodically check the K8S manifests for API versions that are incompatible with upcoming K8S releases

- Periodically update the self-managed components (all of the above, at least; the more you add, the more release notes you need to check and the more updates you need to apply, each with its own cadence)

- Ensure we maintain proper compatibility between components and K8S versions

- When there is a problem, open a bug upstream or, better, a Pull Request, wait for approval, and only then proceed with updating the impacted component.

The above is just the basic hygiene of your infrastructure, without factoring in the additional effort of modernising the K8S realm as well.
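To give an idea of what one of these chores looks like in practice, here is a rough sketch of the "incompatible API version" check. It is not a replacement for a real tool: the function names are mine, the YAML handling is a naive regex over top-level `apiVersion` and `kind` fields (it ignores quoted values and templating), and the removal table lists only a handful of well-known removals from the upstream deprecation guide, so treat it as an assumption-laden starting point.

```python
import re

# A few (apiVersion, kind) pairs and the Kubernetes release that stopped
# serving them. Deliberately incomplete; extend it from the upstream guide.
REMOVED_IN = {
    ("extensions/v1beta1", "Ingress"): "1.22",
    ("networking.k8s.io/v1beta1", "Ingress"): "1.22",
    ("batch/v1beta1", "CronJob"): "1.25",
    ("policy/v1beta1", "PodDisruptionBudget"): "1.25",
    ("autoscaling/v2beta2", "HorizontalPodAutoscaler"): "1.26",
}

# Only match unindented keys, so nested fields such as roleRef.kind are ignored.
API_VERSION_RE = re.compile(r"^apiVersion:\s*(\S+)\s*$", re.MULTILINE)
KIND_RE = re.compile(r"^kind:\s*(\S+)\s*$", re.MULTILINE)
DOC_SEPARATOR_RE = re.compile(r"^---\s*$", re.MULTILINE)


def version_tuple(version):
    """Turn '1.25' into (1, 25) so versions compare numerically."""
    return tuple(int(part) for part in version.split("."))


def find_removed_apis(manifest_text, target_version):
    """Return (kind, apiVersion, removed_in) for every document in the
    manifest that the target Kubernetes version no longer serves."""
    findings = []
    for document in DOC_SEPARATOR_RE.split(manifest_text):
        api = API_VERSION_RE.search(document)
        kind = KIND_RE.search(document)
        if not api or not kind:
            continue
        removed = REMOVED_IN.get((api.group(1), kind.group(1)))
        if removed and version_tuple(target_version) >= version_tuple(removed):
            findings.append((kind.group(1), api.group(1), removed))
    return findings


# Hypothetical manifest used only to exercise the function.
SAMPLE = """\
apiVersion: policy/v1beta1
kind: PodDisruptionBudget
metadata:
  name: my-app
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: my-app
"""

if __name__ == "__main__":
    for kind, api, removed in find_removed_apis(SAMPLE, "1.25"):
        print(f"{kind} uses {api}, removed in Kubernetes {removed}")
```

Even in this toy form, someone has to own the removal table, run the check before every control plane upgrade, and repeat the exercise for every chart and operator installed in the cluster.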

#The Questions

Is it fu@*@! worth it?

Wouldn't it be better to just offload everything to the cloud provider and use the commodities it offers to run the only thing we actually care about in our company, which is the final workload?

Why don't we use ECS + Fargate, or Azure Containers, or GCP Cloud Run? The underlying network and the rest we still need to provision in any case.

AWS, Azure and GCP already offer, via their robust frameworks, all the services we need. The main difference is that when you install a component on top of Kubernetes you don't have an SLA: it's managed by you, and you also need to come up with the proper maintenance, day-2 operations and upgrade patterns. And you have to take care of the Kubernetes upgrades as well.

#The Bet

If you are a multi-cloud company (are you really?), I'm more and more convinced that it would be less costly and less frustrating (incident-wise) to set up small teams of cloud experts that maintain a simpler infrastructure, with fewer maintenance operations, and run the workload in each cloud in its vendor-locked-in flavour.